Architecture

Policy-driven execution that constructs runtimes in memory, enforces boundaries by design, and retires environments cleanly.

Executive Summary

BeacenAI is a policy-driven execution architecture that treats infrastructure as constructed, not maintained. Instead of administering long-lived operating systems and brittle configurations, BeacenAI assembles the exact runtime a workload needs—at boot, in memory, and from policy—then discards it when execution ends. The result is consistent environments, zero drift, minimized attack surface, and dramatically lower operational friction across servers, desktops, AI inference, and AI learning.

The Problem With Traditional Architecture

Modern infrastructure is no longer constrained by hardware availability. It is constrained by execution fragility: operating systems drift, updates introduce instability, long-lived nodes accumulate risk, and admin effort scales with fleet size. In AI environments, these weaknesses show up immediately as tail latency, jitter, inconsistent performance, failed training runs, and rising operational cost.

The BeacenAI Principle

Build the environment from policy. Destroy it when finished. BeacenAI replaces “configure and maintain” with “compose and verify.” At session start, BeacenAI evaluates hardware and context, pulls only what policy allows, constructs a minimal runtime in memory, executes within enforced boundaries, and retires cleanly—no remnants, no drift, no decay.
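The lifecycle above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not BeacenAI's implementation. `Policy`, `Runtime`, and `session` are hypothetical names. The policy is modeled as a simple allow-list, and "constructing a runtime in memory" is reduced to building a Python object. The context manager guarantees that the retire step runs even if execution fails.

```python
from contextlib import contextmanager
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    """Hypothetical policy: an allow-list of components a session may pull in."""
    allowed: frozenset


@dataclass
class Runtime:
    """Hypothetical in-memory runtime assembled for a single session."""
    components: list
    retired: bool = False


@contextmanager
def session(policy: Policy, hardware: dict, requested: list):
    # 1. Evaluate hardware and context: derive hardware-specific components.
    hw_components = [f"{kind}:{model}" for kind, model in sorted(hardware.items())]
    # 2. Pull only what policy allows; anything not on the allow-list is dropped.
    components = [c for c in hw_components + requested if c in policy.allowed]
    # 3. Construct a minimal runtime in memory.
    runtime = Runtime(components=components)
    try:
        # 4. Execute within the boundary the policy defined.
        yield runtime
    finally:
        # 5. Retire cleanly, even if execution raised: nothing carries over.
        runtime.components.clear()
        runtime.retired = True


policy = Policy(allowed=frozenset({"cpu:x86_64", "gpu:nvidia", "app:inference"}))
with session(policy, {"cpu": "x86_64", "gpu": "nvidia"}, ["app:inference", "app:shell"]) as rt:
    print(rt.components)  # ['cpu:x86_64', 'gpu:nvidia', 'app:inference']
print(rt.retired)  # True
```

Here `app:shell` is requested but is not on the allow-list, so it never enters the runtime.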

As a Server

Modern application infrastructure is moving toward disposable compute: instances are created on demand, optimized for a specific workload, and retired cleanly. Persistent servers introduce configuration drift, security exposure, and scaling friction.

BeacenAI constructs server instances from policy—purpose-built, hardware-aligned, and memory-resident. The platform assembles only the required operating system components and workload bundles at boot, then discards the environment when finished. This eliminates manual provisioning and avoids the slow decay that makes fleets fragile over time.

The result is a server fleet that behaves like a system: predictable, resilient, and structurally secure—because every runtime is reconstructed from policy, not nursed forward through drift.

Architecture for AI Inference

Inference performance is governed by execution discipline as much as by model size. Tail latency and jitter often come from OS noise, background contention, and persistent configuration sprawl, not GPU capability.

BeacenAI constructs a minimal runtime optimized for the inference task and the exact hardware it runs on. By keeping the execution surface tight, memory-resident, and policy-bounded, inference becomes more deterministic—reducing overprovisioning and improving effective GPU utilization.

- Reduced tail latency through fewer sources of execution jitter

- Higher effective GPU utilization by eliminating OS contention

- Lower overprovisioning because stability is structural, not tuned

- Stronger isolation via policy-defined execution boundaries
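Tail latency is typically reported as a high percentile of per-request latency (for example, p99) rather than as an average, and jitter shows up as a wide gap between the median and that tail. The sketch below uses the nearest-rank percentile definition. The function names and sample latencies are illustrative only; they are not BeacenAI measurements.

```python
import math


def percentile(samples, p):
    """Nearest-rank percentile: smallest sample with at least p% of samples <= it."""
    if not samples or not 0 < p <= 100:
        raise ValueError("need at least one sample and 0 < p <= 100")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def tail_report(latencies_ms):
    """Summarize median vs. tail; a large ratio means a few requests are much slower."""
    p50 = percentile(latencies_ms, 50)
    p99 = percentile(latencies_ms, 99)
    return {"p50": p50, "p99": p99, "tail_ratio": p99 / p50}


# Illustrative data: 97 fast requests and 3 slow outliers.
latencies = [10.0] * 97 + [45.0, 60.0, 80.0]
print(tail_report(latencies))  # {'p50': 10.0, 'p99': 60.0, 'tail_ratio': 6.0}
```

The mean of these samples is only 11.55 ms, which hides the outliers. Reducing sources of jitter shows up as a shrinking `tail_ratio`.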

Architecture for AI Learning

Learning requires reproducibility at scale. Small differences in drivers, kernels, memory behavior, or system configuration can alter outcomes or cause training runs to fail. Traditional environments accumulate entropy and become harder to trust over time.

BeacenAI prevents this by reconstructing learning nodes from policy. When a node fails or degrades, it is not repaired—it is rebuilt. This is how learning clusters operate continuously without accumulating drift, and how large-scale training becomes operationally sustainable.

- Identical runtime reconstruction across clusters and job runs

- Rapid recovery from node failure through stateless rebuild

- Policy-bound data access and controlled execution surfaces

- Compounding reliability because entropy is structurally removed
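"Rebuilt, not repaired" can be expressed as a reconcile loop: fingerprint each node's configuration, compare it with the fingerprint of the policy, and replace any node that has failed or drifted with a fresh build instead of patching it. This is a minimal sketch. `Node`, `build_from_policy`, `reconcile`, and the configuration keys and values are hypothetical, and a real system would rebuild actual machines rather than Python objects.

```python
import copy
import hashlib
import json
from dataclasses import dataclass


def fingerprint(config):
    """Stable SHA-256 of a configuration; sorted keys make it order-independent."""
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode()).hexdigest()


@dataclass
class Node:
    name: str
    config: dict
    healthy: bool = True


def build_from_policy(name, policy_config):
    # Hypothetical rebuild: a fresh node receives its own copy of the policy config.
    return Node(name=name, config=copy.deepcopy(policy_config))


def reconcile(nodes, policy_config):
    """Keep nodes that match policy; rebuild failed or drifted ones, never patch."""
    expected = fingerprint(policy_config)
    fleet, rebuilt = [], []
    for node in nodes:
        if node.healthy and fingerprint(node.config) == expected:
            fleet.append(node)
        else:
            fleet.append(build_from_policy(node.name, policy_config))
            rebuilt.append(node.name)
    return fleet, rebuilt


policy = {"kernel": "k1", "gpu_driver": "d7", "framework": "f3"}  # placeholder values
nodes = [
    Node("n1", dict(policy)),
    Node("n2", {**policy, "gpu_driver": "d6"}),  # drifted driver
    Node("n3", dict(policy), healthy=False),     # failed node
]
fleet, rebuilt = reconcile(nodes, policy)
print(rebuilt)  # ['n2', 'n3']
```

Because every rebuild starts from the same policy, each node in the resulting fleet carries an identical fingerprint.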

As a Desktop

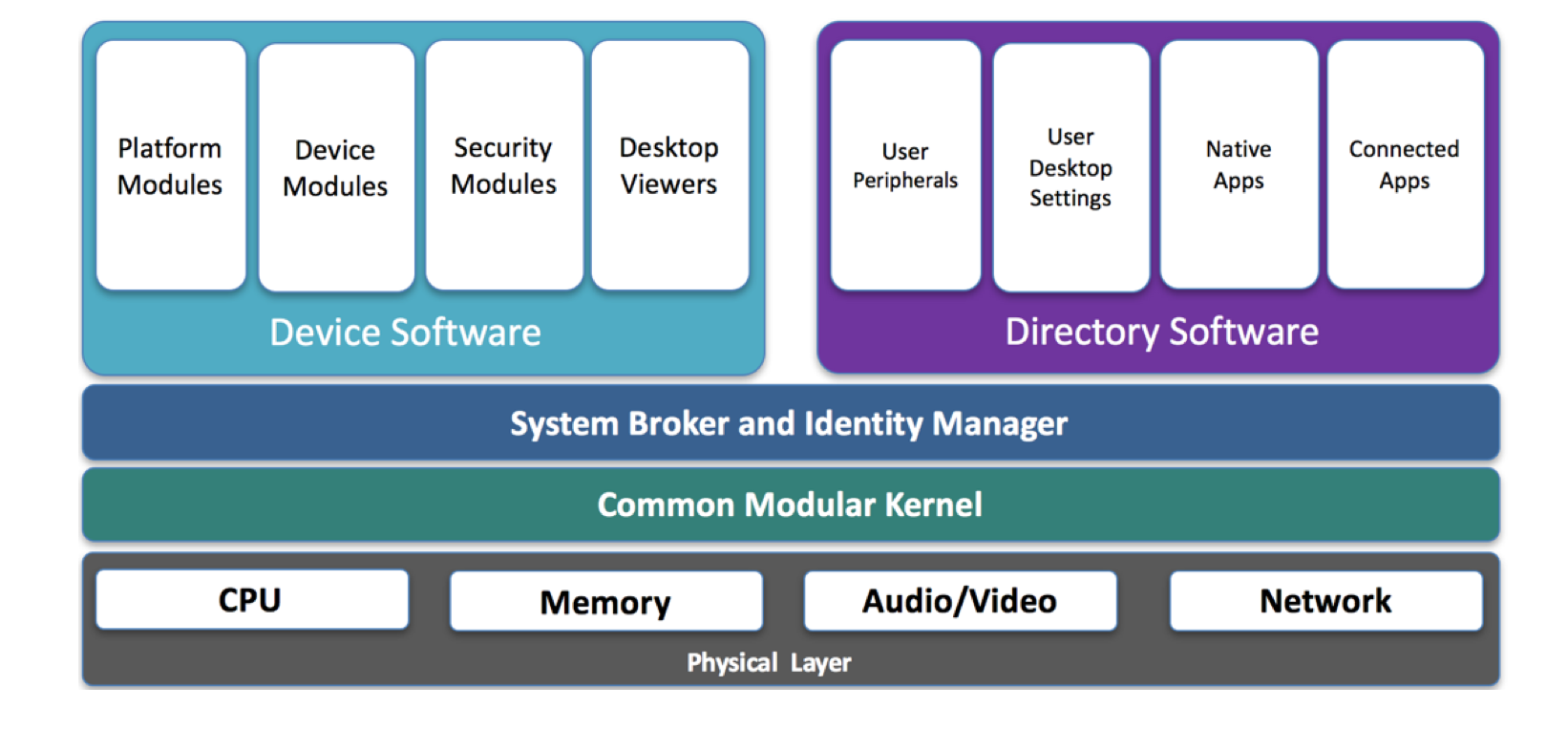

The zStation desktop platform differs from conventional Linux distributions through modular, policy-driven composition. Its components are packaged as loosely coupled, autonomous modules and assembled dynamically at boot. This enables a desktop environment that adapts to hardware, user role, and security posture without becoming a long-lived endpoint that drifts over time.

Instead of “managing devices,” BeacenAI constructs compliant desktop sessions—consistent by design, fast to recover, and secure through non-persistence.

Modular Design

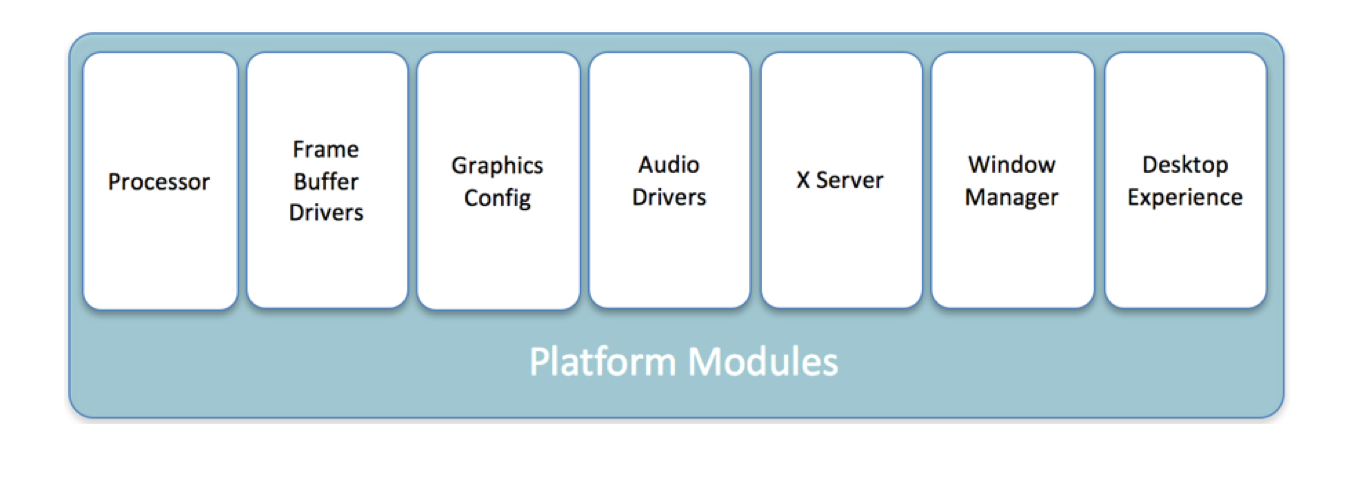

To deliver peak performance and optimized power management, zStation loads hardware-specific kernel objects and drivers at early boot. Rather than bundling every possible driver—many of which are unnecessary—BeacenAI uses hardware detection and policy enforcement to inject only the required platform modules. When required, the system dynamically relinks the kernel, producing a lightweight foundation tailored to the device’s exact specifications.

These intelligent platform modules include the following elements:

- Processor: Power management and kernel-level optimizations specific to the underlying processor architecture.

- Frame Buffer Drivers: GPU support for modern acceleration paths (e.g., OpenGL/OpenCL) and high-performance media.

- Graphics Config: Fine-tuned display configuration for diverse monitors, panels, and deployment environments.

- Audio Drivers: Automatic detection and configuration of one or more audio subsystems present on the platform.

- X Server: Enables the Linux graphical environment, constructed only when policy requires a desktop UI.

- Window Manager: User interface controls and navigation features for the assembled desktop session.

- Desktop Experience: Taskbar, dock, or icon grid selectable by policy based on form factor (tablet, laptop, workstation).
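The detection-plus-policy selection described above can be sketched as a lookup from detected devices to platform modules, followed by a policy check that decides whether the graphical stack is built at all and which desktop experience it uses. The catalog entries, module names, and form-factor mapping below are hypothetical placeholders, and kernel relinking is not modeled.

```python
from dataclasses import dataclass

# Hypothetical catalog: which platform module serves which detected device.
MODULE_CATALOG = {
    ("cpu", "amd"): "processor-amd",
    ("cpu", "intel"): "processor-intel",
    ("gpu", "nvidia"): "framebuffer-nvidia",
    ("gpu", "intel"): "framebuffer-intel",
    ("audio", "hda"): "audio-hda",
    ("audio", "usb"): "audio-usb",
}

# Hypothetical policy mapping from form factor to desktop experience.
DESKTOP_BY_FORM_FACTOR = {
    "tablet": "icon-grid",
    "laptop": "dock",
    "workstation": "taskbar",
}


@dataclass(frozen=True)
class DesktopPolicy:
    desktop_ui: bool
    form_factor: str


def plan_modules(detected, policy):
    """Return only the modules this device and policy need, in load order."""
    # Hardware modules first, and only for devices that are present and catalogued.
    plan = [MODULE_CATALOG[d] for d in detected if d in MODULE_CATALOG]
    if policy.desktop_ui:
        # The graphical stack is constructed only when policy requires a desktop UI.
        plan += ["graphics-config", "x-server", "window-manager",
                 DESKTOP_BY_FORM_FACTOR[policy.form_factor]]
    return plan


laptop = plan_modules(
    [("cpu", "intel"), ("gpu", "intel"), ("audio", "hda"), ("audio", "usb")],
    DesktopPolicy(desktop_ui=True, form_factor="laptop"),
)
print(laptop[-1])  # dock
headless = plan_modules([("cpu", "amd"), ("gpu", "nvidia")],
                        DesktopPolicy(desktop_ui=False, form_factor="workstation"))
print(headless)  # ['processor-amd', 'framebuffer-nvidia']
```

Two audio subsystems on the laptop yield two audio modules, while the headless node never loads a graphical stack at all.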

Smart, Simple, Scalable

BeacenAI is the gateway to a future where scale, heterogeneous hardware, and environment interoperability are no longer operational barriers. Infrastructure becomes repeatable because it is constructed from policy—not maintained through drift.

A Complete Re-Think

Today’s device-centric computing platforms fail to meet the complex challenges caused by modern mobile multi-platform requirements. To meet these demands, a fresh approach is required—one that decouples applications and data from devices and relieves end users of complex systems and administrative tasks; in short, a complete rethink of the platform itself.

Content-centric systems have long been the nirvana of computer systems engineers; however, their requirements conflict with device-centric designs.

By combining (i) in-memory, (ii) stateless, (iii) non-persistent, and (iv) policy-driven computing, Beacen vSeries provides an unparalleled combination of security, performance, agility, and flexibility.

Plug-and-Play

Your computer becomes a true plug-and-play appliance, capable of working "out of the box" without the need for any administrative intervention. The installer only has to plug it in and turn it on.

Fully Responsive

As technology continues its steady migration to mobile platforms, it is not enough for the application alone to be responsive. zStation's modularity makes the entire environment responsive, adapting it to each device's hardware and form factor.

Secure

vSeries represents a new level of security, reducing, if not eliminating, attack vectors. Because the operating system resides on a read-only filesystem, viruses and malware have no persistent attachment point.
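On Linux, whether a mount is read-only is visible in the options field of `/proc/mounts` (the `ro` flag). The helper below is a sketch for checking that property on a running system. `is_read_only` is a hypothetical name, the sample mount table is illustrative, and how vSeries actually mounts its operating system is not specified here.

```python
def is_read_only(mounts_text, mount_point="/"):
    """Return True if mount_point is mounted with the 'ro' option.

    Expects /proc/mounts format: device, mount point, fs type, options, dump, pass.
    """
    options = None
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) >= 4 and fields[1] == mount_point:
            # Keep the last match: later entries are stacked on top and are the visible mount.
            options = fields[3].split(",")
    if options is None:
        raise ValueError(f"{mount_point} is not mounted")
    return "ro" in options


SAMPLE = (
    "squashfs / squashfs ro,relatime 0 0\n"
    "tmpfs /tmp tmpfs rw,nosuid,nodev 0 0\n"
)
print(is_read_only(SAMPLE))          # True
print(is_read_only(SAMPLE, "/tmp"))  # False

# On a live Linux host:
# with open("/proc/mounts") as f:
#     print(is_read_only(f.read()))
```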

In-Memory

The zStation endpoint operating environment runs exclusively in volatile RAM and requires no local storage for any operation. Keeping the execution path off disk delivers performance that storage-bound computer architectures struggle to match.

Stateless

Remember when your computer was new? Everything was fast, and it just worked. As it ages, your computer seems slower, and that's because it is: operating system decay is a real issue. Because zStation is stateless, it is new every time you log in.
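Statelessness can be illustrated with a copy-on-write analogy: the base image is shared and read-only, each login stacks a fresh, empty writable layer on top, and that layer is discarded at logout. This sketch uses Python's `collections.ChainMap` (writes go only to its first mapping) over a `types.MappingProxyType` (a read-only view). The keys are hypothetical, and zStation's actual mechanism is not described here.

```python
from collections import ChainMap
from types import MappingProxyType

# Read-only base image shared by every session (hypothetical settings).
BASE_IMAGE = MappingProxyType({"theme": "default", "browser": "installed"})


def new_session():
    """Each login gets an empty writable layer stacked over the read-only base."""
    return ChainMap({}, BASE_IMAGE)


monday = new_session()
monday["theme"] = "dark"          # written to the session layer only
monday["cache"] = "accumulated"   # session clutter
print(monday["theme"], monday["browser"])  # dark installed

# Logout: the session layer is simply dropped. The next login starts clean.
tuesday = new_session()
print(tuesday["theme"], "cache" in tuesday)  # default False
```

Nothing written during Monday's session can reach the base image, so there is nothing to decay.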

Consistent

Policy-based computing ensures that every system is deployed in the configuration its policy defines, so the operating system and application workloads run in the same optimal configuration on all systems.