Products

BeacenAI is the autonomous execution layer for modern enterprise and AI infrastructure—policy-driven, stateless by design, and built to regenerate environments instead of maintaining them.

Platform Overview

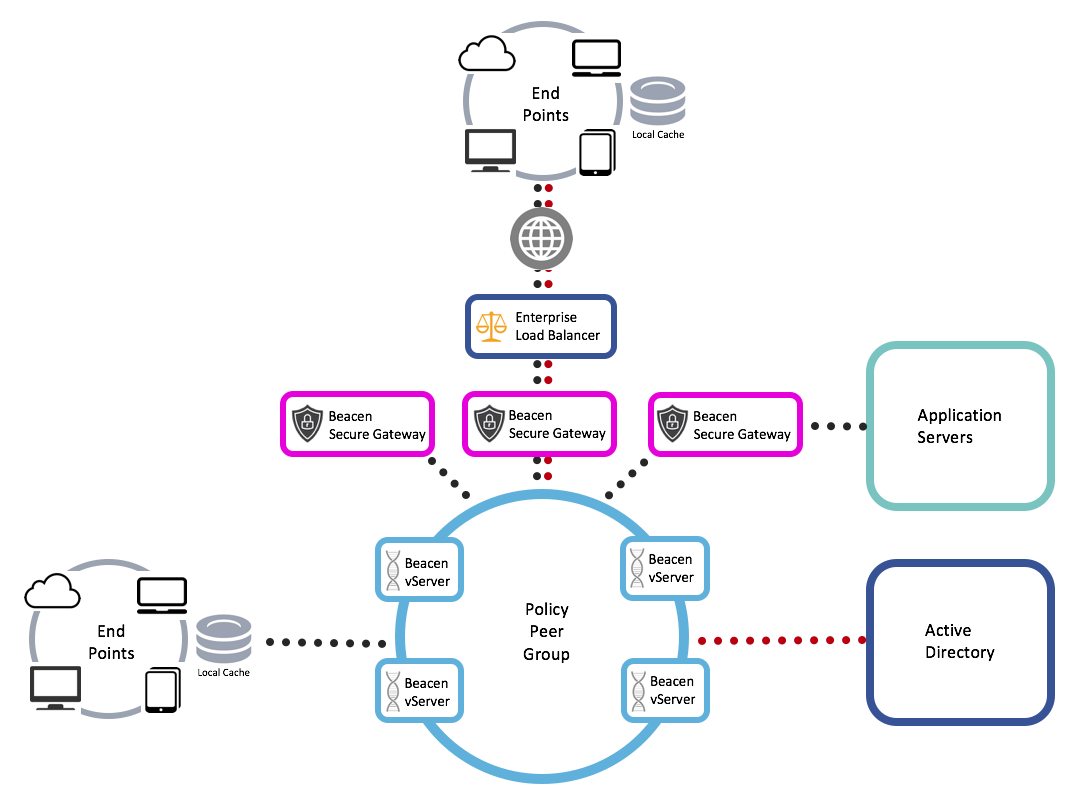

Most infrastructure still runs on a legacy assumption: machines persist. Configurations drift, credentials linger, endpoints accumulate state, and operations become an endless maintenance loop. BeacenAI replaces that model with a different primitive: execution without persistence. Every environment is dynamically constructed from policy, verified at runtime, and torn down when the task is complete.

This is not “automation on top of the stack.” BeacenAI operates at the layer where outcomes are won or lost: the operating environment itself. The platform continuously evaluates identity, device posture, network conditions, mission requirements, and location context so that it composes only what is needed, nothing more, and keeps enforcing that composition at runtime.
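The composition step described above can be illustrated with a minimal sketch. The rule format, the context attributes (`role`, `device_compliant`, `network`, `region`), and the component names below are hypothetical placeholders, not BeacenAI's actual policy schema. The sketch only shows the general idea of composing an environment from runtime context with a deny-by-default stance: a component is included only if some rule grants it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Context:
    """Runtime signals evaluated for each request (hypothetical attributes)."""
    role: str
    device_compliant: bool
    network: str
    region: str


@dataclass(frozen=True)
class Rule:
    """Grants its components only when every condition matches the context."""
    components: frozenset
    roles: frozenset = frozenset()      # empty set means "any role"
    networks: frozenset = frozenset()   # empty set means "any network"
    regions: frozenset = frozenset()    # empty set means "any region"
    require_compliant_device: bool = True

    def matches(self, ctx: Context) -> bool:
        if self.require_compliant_device and not ctx.device_compliant:
            return False
        if self.roles and ctx.role not in self.roles:
            return False
        if self.networks and ctx.network not in self.networks:
            return False
        if self.regions and ctx.region not in self.regions:
            return False
        return True


def compose(ctx: Context, rules: list[Rule]) -> frozenset:
    """Return only the components granted by matching rules (deny by default)."""
    granted: set = set()
    for rule in rules:
        if rule.matches(ctx):
            granted |= rule.components
    return frozenset(granted)


# Hypothetical example policy used to illustrate composition.
EXAMPLE_RULES = [
    Rule(components=frozenset({"browser", "office-suite"})),
    Rule(components=frozenset({"finance-app"}),
         roles=frozenset({"finance"}), networks=frozenset({"corp"})),
    Rule(components=frozenset({"export-tool"}),
         roles=frozenset({"finance"}), regions=frozenset({"us"})),
]
```

With this example policy, a `finance` user on a compliant device on a `home` network in the `us` region would receive `browser`, `office-suite`, and `export-tool`, but not `finance-app`, which is restricted to the `corp` network. A non-compliant device matches no rule and receives an empty environment.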

What BeacenAI Delivers

Stateless execution: reduce breach blast radius, eliminate configuration drift, and remove the need for local persistent storage on endpoints.

Policy-driven composition: environments are built from intent (role, group, device, network, geography), then verified continuously.

Autonomous resilience: when nodes fail, BeacenAI regenerates the environment instead of repairing a broken one.

Operational compression: fewer manual workflows, fewer brittle images, and fewer emergency changes, for more predictable execution.
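The "regenerate instead of repair" and "no configuration drift" ideas above can be pictured as a reconcile loop that replaces nodes rather than patching them. This is a minimal sketch under stated assumptions, not BeacenAI's implementation: `build` is a hypothetical stand-in for whatever actually provisions a node, and a SHA-256 digest of the policy-derived spec is used here to detect nodes that no longer match the current policy.

```python
import hashlib
import json
from dataclasses import dataclass


def spec_digest(spec: dict) -> str:
    """Stable fingerprint of a policy-derived environment spec."""
    canonical = json.dumps(spec, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


@dataclass
class Node:
    name: str
    healthy: bool
    built_from: str  # digest of the spec this node was built from


def build(name: str, spec: dict) -> Node:
    """Hypothetical provisioner: construct a fresh node from the spec."""
    return Node(name=name, healthy=True, built_from=spec_digest(spec))


def reconcile(nodes: list[Node], spec: dict) -> tuple[list[Node], list[str]]:
    """Keep healthy, up-to-date nodes; rebuild failed or drifted ones from scratch.

    Nothing is patched in place: a node that fails or diverges from the
    current spec is discarded and a new one is built from policy.
    """
    desired = spec_digest(spec)
    result: list[Node] = []
    regenerated: list[str] = []
    for node in nodes:
        if node.healthy and node.built_from == desired:
            result.append(node)
        else:
            result.append(build(node.name, spec))
            regenerated.append(node.name)
    return result, regenerated
```

The design choice being illustrated is that the spec, not the running node, is the source of truth, so recovery and drift correction follow the same path: rebuild from the spec.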

A Unified Execution Layer

The same mechanism that hardens desktops also hardens servers and AI workloads: a controlled, ephemeral runtime where security boundaries, dependencies, and configuration are deterministic. The result is infrastructure that can scale across heterogeneous hardware, cloud, edge, and disconnected/contested environments—without multiplying administrative burden.

BeacenAI decouples applications and workloads from fragile OS and hardware assumptions. Application bundles are delivered by policy, executed in a clean runtime, and retired when complete—creating a platform that is portable, repeatable, and defensible at scale.
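One way to picture a bundle that is "executed in a clean runtime, and retired when complete" is a scoped lifecycle. The sketch below is an illustrative assumption, not BeacenAI's runtime: a hypothetical bundle (a mapping of relative paths to file contents) is materialized into a fresh temporary directory, the task runs against it, and the directory, including anything the task wrote, is deleted when the scope exits, even if the task raises.

```python
import os
import tempfile
from contextlib import contextmanager
from typing import Callable, Iterator


@contextmanager
def ephemeral_runtime(bundle: dict[str, str]) -> Iterator[str]:
    """Materialize a bundle into a scratch directory that is removed on exit."""
    with tempfile.TemporaryDirectory(prefix="bundle-") as root:
        for rel_path, content in bundle.items():
            path = os.path.join(root, rel_path)
            os.makedirs(os.path.dirname(path), exist_ok=True)
            with open(path, "w", encoding="utf-8") as fh:
                fh.write(content)
        yield root
    # Leaving the TemporaryDirectory block deletes every file, including task output.


def run_task(bundle: dict[str, str], task: Callable[[str], str]) -> tuple[str, str]:
    """Run a task in a clean runtime; return its result and the (now deleted) path."""
    with ephemeral_runtime(bundle) as root:
        result = task(root)
    return result, root
```

Because each call starts from an empty directory populated only from the bundle, two runs of the same task see identical starting state, which is the repeatability property the paragraph above describes.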

Product Modules

Deploy BeacenAI as an integrated platform or adopt modules based on your architecture and timeline.

Go Deeper

If you want the full narrative and the system design, start here.

Want a fast, technical walkthrough?

Tell us your environment (endpoints, AI workloads, data center, edge) and the constraints you can’t compromise on. We’ll map how BeacenAI composes and enforces a stateless execution layer in your stack.